
With generative AI tools like ChatGPT, GitHub Copilot, and Tabnine flooding the software development space, developers are quickly adopting these technologies to help automate everyday development tasks. Use of these tools continues to grow rapidly. A recent Stack Overflow survey found that an overwhelming 70% of its 89,000 respondents either already use AI tools in their development process or plan to do so in 2023.

In response to the growing AI landscape, Synopsys is proud to announce a preview of its new AI code analysis API. The API lets developers analyze code generated by AI tools and identify open source snippets and their related license and copyright terms. Available via the Synopsys Polaris SaaS platform and powered by Black Duck® snippet analysis, it accepts code blocks generated by AI tools and reports whether they match an open source project and, if so, which license that project is associated with. With this information, teams can be confident that they are not building and shipping applications that contain someone else’s protected intellectual property.

Synopsys Senior Sales Engineer Frank Tomasello recently hosted a webinar, “Black Duck Snippet Matching and Generative AI Models,” to discuss the rise of AI and how our snippet analysis technology helps protect teams and IP in this uncertain frontier. We touch upon the key webinar takeaways below.

The risks of AI-assisted programming

The good: Fewer resource constraints. The bad: Inherited code with unknown restrictions. The ugly: License conflicts with potential legal implications.

Citing the Stack Overflow survey noted above, Tomasello emphasized in the webinar that the industry is well on its way toward AI-assisted programming. While AI assistance helps teams work within tight resource and time constraints, lazy or insecure use of it can mean a whole world of trouble.

AI tools like Copilot and ChatGPT are built on machine learning models trained on vast repositories of public and open source code. These models then use the context their users provide to suggest lines of code to incorporate into proprietary projects. At face value, this is tremendously helpful for speeding up development and easing resource limitations. However, because open source code was used to train these tools, it is essential to recognize that a significant portion of that code may be copyrighted or subject to restrictive licensing conditions, and that the tools’ suggestions can reproduce it.

The worst-case scenario is already playing out: earlier this year, GitHub and OpenAI faced groundbreaking class-action lawsuits claiming that they violated copyright law by allowing Copilot and ChatGPT to generate sections of code without giving the necessary credit or attribution to the original authors. The fallout from these lawsuits, and from the future suits that will inevitably follow, remains to be seen, but this is litigation no organization wants to face.

The danger here is therefore not the use of generative AI tools, but the failure to complement their use with tools capable of identifying license conflicts and their potential risk.

The challenge of securing AI-generated code

We’ve seen the consequences of failing to adhere to license requirements over and over, long before AI: think Free Software Foundation v. Cisco Systems in 2008 and Artifex Software v. Hancom in 2017. The risk remains the same, but as AI-assisted software development advances, it’s becoming ever more crucial for companies to stay vigilant about potential copyright violations and maintain strict compliance with the terms of open source licenses.

Business leaders want to implement AI guardrails and protections, but they often lack a tactical, sustainable way to do so. Today, most organizations either ignore these needs entirely or take an unsustainably manual approach. A manual approach demands considerable resources to maintain: more people, more money, and more time. With uncertain economic conditions and limited capacity, organizations are struggling to dedicate the necessary effort to this task. In addition, the complexity of license obligations calls for a level of expertise and training that many organizations lack.

Further compounding the issue is human error. It is unrealistic to expect developers to painstakingly trace every open source component back to its origin and correctly identify every license attached to it, especially given the massive scale of open source usage in modern applications.

What is required is an automated solution that goes above and beyond common open source discovery methods to help teams simplify and accelerate the compliance aspect of open source usage.

Black Duck snippet analysis

Most software composition analysis (SCA) tools resolve open source dependencies by parsing the files package managers use, but that is not sufficient to identify the IP obligations associated with AI-generated code. This code usually arrives as blocks or snippets pasted directly into source files, so package managers never see it and it is never declared in manifest files like package.json or pom.xml. That’s why Black Duck goes several steps further in identifying open source dependencies, including conducting snippet analysis.
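To illustrate why manifest-based detection misses pasted code, here is a minimal Python sketch that assumes a simplified package.json with only `dependencies` and `devDependencies` maps. It lists what a manifest-parsing scan would see. The helper name `declared_dependencies` is hypothetical and not part of any SCA product.

```python
import json


def declared_dependencies(manifest_text: str) -> dict[str, str]:
    """Return the name -> version-range pairs declared in a package.json.

    This is all a manifest-based scan can see; code copied straight
    into a source file leaves no entry here.
    """
    manifest = json.loads(manifest_text)
    deps: dict[str, str] = {}
    for section in ("dependencies", "devDependencies"):
        deps.update(manifest.get(section, {}))
    return deps


package_json = """
{
  "name": "my-app",
  "dependencies": {"express": "^4.18.2"},
  "devDependencies": {"jest": "^29.0.0"}
}
"""

print(declared_dependencies(package_json))
# prints {'express': '^4.18.2', 'jest': '^29.0.0'}
```

If a developer pastes an AI-suggested function copied from some open source project into a file such as `src/utils.js`, this output does not change. That gap is what snippet analysis is meant to close.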

Black Duck’s snippet analysis does exactly what its name suggests: it analyzes source code and can match snippets as small as a handful of lines to the open source projects they originated from. As a result, Black Duck can report the license associated with that project and advise on the associated risk and obligations. This is all powered by a KnowledgeBase™ of more than 6 million open source projects and over 2,750 unique open source licenses.
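The general idea behind snippet matching can be sketched with line-window fingerprinting. The Python below is a toy illustration of that generic technique, not Black Duck’s actual algorithm. It normalizes whitespace, hashes every run of `K` consecutive lines, and looks those hashes up in an index built from a corpus. The window size and the two-project corpus (`toy-sparse`, `toy-strings`, with their licenses) are made-up assumptions for the example.

```python
import hashlib
from collections import defaultdict

K = 3  # window size in normalized lines (an assumption; real tools tune this)


def normalize(code: str) -> list[str]:
    """Collapse whitespace and drop blank lines so reformatting doesn't hide a match."""
    lines = (" ".join(line.split()) for line in code.splitlines())
    return [line for line in lines if line]


def fingerprints(code: str, k: int = K) -> set[str]:
    """Hash every run of k consecutive normalized lines."""
    lines = normalize(code)
    return {
        hashlib.sha1("\n".join(lines[i:i + k]).encode()).hexdigest()
        for i in range(len(lines) - k + 1)
    }


def build_index(projects: dict[str, tuple[str, str]]) -> dict[str, set[str]]:
    """Map each fingerprint to the names of projects whose source contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for name, (_license, source) in projects.items():
        for fp in fingerprints(source):
            index[fp].add(name)
    return index


def match_snippet(snippet: str, projects: dict[str, tuple[str, str]],
                  index: dict[str, set[str]]) -> list[tuple[str, str, float]]:
    """Return (project, license, fraction of snippet windows matched), best first."""
    fps = fingerprints(snippet)
    if not fps:
        return []  # snippet shorter than one window
    hits: dict[str, int] = defaultdict(int)
    for fp in fps:
        for name in index.get(fp, ()):
            hits[name] += 1
    results = [(name, projects[name][0], n / len(fps)) for name, n in hits.items()]
    return sorted(results, key=lambda r: r[2], reverse=True)


# Hypothetical corpus: project name -> (license, source text)
PROJECTS = {
    "toy-sparse": ("LGPL-2.1-or-later", """
int *alloc_ints(int n)
{
    int *p = malloc(n * sizeof(int));
    if (!p) return NULL;
    for (int i = 0; i < n; i++) p[i] = 0;
    return p;
}
"""),
    "toy-strings": ("MIT", """
size_t count_char(const char *s, char c)
{
    size_t n = 0;
    while (*s) if (*s++ == c) n++;
    return n;
}
"""),
}

index = build_index(PROJECTS)
# A re-indented excerpt of toy-sparse, as an AI assistant might emit it
snippet = """
int *p = malloc(n * sizeof(int));
  if (!p)   return NULL;
for (int i = 0; i < n; i++) p[i] = 0;
return p;
"""
print(match_snippet(snippet, PROJECTS, index))
# prints [('toy-sparse', 'LGPL-2.1-or-later', 1.0)]
```

Whitespace normalization is why the re-indented excerpt still matches. A production matcher would likely also need to handle renamed identifiers and much larger corpora, which this sketch does not attempt.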

Figure 1. Developers send a POST request to the Black Duck API with the AI-generated snippet in the body.

Figure 2. Developers receive a response containing details about any open source matches and associated license information.
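The request/response flow in Figures 1 and 2 might look roughly like the following Python sketch. Everything API-specific here is an assumption for illustration: the endpoint URL is a placeholder, and the bearer-token header and the JSON field names (`snippet`, `matches`, `project`, `license`, `url`) are hypothetical. Consult the Polaris documentation for the real contract.

```python
import json
import urllib.request

# Placeholder endpoint, NOT a real URL; replace with the one from your Polaris documentation.
API_URL = "https://polaris.example.invalid/api/ai-code-analysis"


def build_request(snippet: str, token: str) -> urllib.request.Request:
    """Wrap an AI-generated snippet in a JSON POST body (field name 'snippet' is hypothetical)."""
    body = json.dumps({"snippet": snippet}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # auth scheme is an assumption
        },
    )


def summarize_matches(response_text: str) -> list[str]:
    """Turn a (hypothetical) JSON response into one line per open source match."""
    payload = json.loads(response_text)
    return [
        f"{m['project']} ({m['license']}): {m['url']}"
        for m in payload.get("matches", [])
    ]


def analyze(snippet: str, token: str) -> list[str]:
    """Send the snippet and summarize the reply; needs network access and a valid token."""
    with urllib.request.urlopen(build_request(snippet, token), timeout=30) as resp:
        return summarize_matches(resp.read().decode("utf-8"))


# Made-up response body, shaped the way this sketch assumes
sample = ('{"matches": [{"project": "example-project", "license": "MIT", '
          '"url": "https://github.com/example/example-project"}]}')
print(summarize_matches(sample))
# prints ['example-project (MIT): https://github.com/example/example-project']
```

Keeping request construction and response parsing separate from `analyze` lets you check both offline before pointing the code at a real endpoint.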

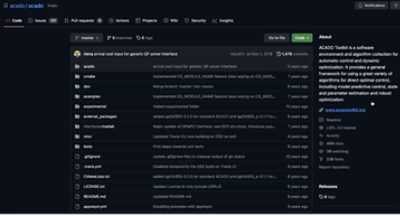

Figure 3. In this example, the snippet was matched to ACADO Toolkit. The link provided in the API response points to the GitHub page for this project.

Figure 4. For this example, the code snippet, provided by GitHub Copilot, is a direct match to the cs_spalloc function of cs_util.c in this project.

Synopsys is now offering a free public preview of this AI code analysis tool. It lets developers use productivity-boosting AI tools without worrying about violating license terms that other SCA tools might overlook.